Some years ago, I traced out two decades of the ‘confusingly confounding’ regulation of software patents in India. The “ping-ponging” journey traced in that post seems to have been a precursor to the judicial fragmentation that Section 3(k)’s interpretation would soon see. Last week, a few of us (Bharathwaj Ramakrishnan, Yogesh Byadwal, Anushka Dhankar and I) were putting together comments for the 2025 Draft Computer Related Inventions (CRI) Guidelines. After some grueling work going through as many orders as we could from the last few years, what really stood out to us was how many different ‘tests’ were being used in interpreting Section 3(k).

There is a lot to say on CRIs, from detailed policy-based critiques to more nuanced internal critiques, such as on disclosure requirements and algorithmic transparency. However, for the limited purposes of this call for comments, we decided to eschew all of that and ask for the simple (yet perhaps unlikely) “disclosure” of how fragmented the interpretative landscape of Section 3(k) has been. After all, the patent office is bound by judicial precedent. If judicial precedent doesn’t demonstrate a clear way forward (with clear reasoning), then the question of adequately following processes in examining patent applications is stymied from the get-go. The draft guidelines mention excerpts from some cases. However, why are those mentioned in the sections they are? What about those cases is authoritative in terms of the guidance that can be taken from them? What about the ones, from equal judicial authority, that are not mentioned? Why are some being chosen over others?

The Patent Office, it seems, has been put in a difficult place on this front. After all, they are unlikely to want to be the ones to point out this fragmentation. Yet, if this fragmentation is not acknowledged, what is the way forward for examiners/controllers when they need to lay out the interpretation and reasoning that they are so often (rightly) criticised for not giving?

Our submitted comments are available here.

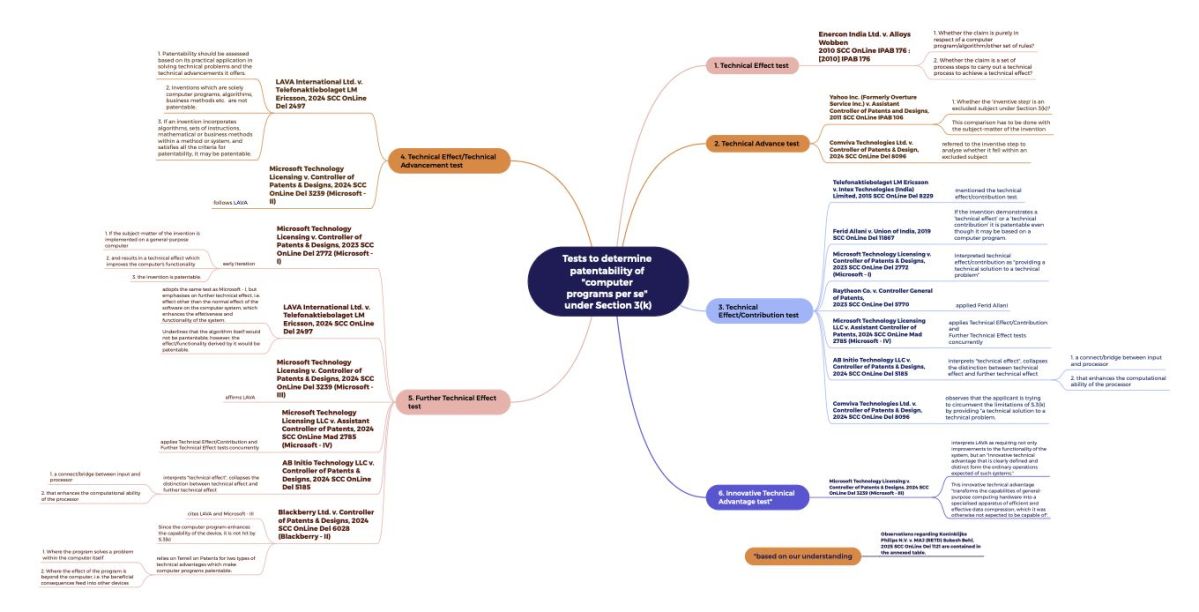

For the more immediate purposes of the above point though, the two charts below (created by Anushka) present what we could make of the interpretative inconsistencies just over the last few years. The first chart shows all the different ‘technical’ tests that have been used in relation to ‘computer programmes per se’ (meanwhile, to me, it’s still unclear what ‘technical’ is even supposed to mean, especially in the “technical” field of computers!). The second chart deals with the other subject matters under Section 3(k). While this area continues to evolve, we hope these (along with the annexure in the comments) are useful for anyone looking to understand what’s happening on this front!

(We had inserted two charts conveying the same information in our submitted comments. They have been redesigned a bit here to make them more accessible and a bit easier to read.)